I needed to build a capability within Titan Class Vision to composite many images representing parts of our planet at different resolutions. These images can be quite large: perhaps 200MB each.

I decided to give memory-mapped files a go, using the low-level paging mechanisms that modern operating systems provide; in a nutshell, that means mmap and munmap. Memory mapping is very fast and highly optimised: pages are read from disk only when they are first touched, which makes it one of the fastest ways of getting a file's bytes into memory.
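A minimal sketch of mapping a whole file read-only with POSIX `open`, `fstat`, `mmap` and `munmap`. `MappedFile` is a hypothetical RAII wrapper, not part of Titan Class Vision, and the sketch assumes a POSIX system and C++11.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <fcntl.h>     // open
#include <sys/mman.h>  // mmap, munmap
#include <sys/stat.h>  // fstat
#include <unistd.h>    // close

// Hypothetical RAII wrapper: maps a whole file read-only and unmaps it on destruction.
class MappedFile {
public:
    explicit MappedFile(const std::string& path) {
        int fd = ::open(path.c_str(), O_RDONLY);
        if (fd < 0) throw std::runtime_error("open failed: " + path);
        struct stat st;
        if (::fstat(fd, &st) != 0 || st.st_size <= 0) {
            ::close(fd);
            throw std::runtime_error("cannot map empty or unreadable file: " + path);
        }
        size_ = static_cast<std::size_t>(st.st_size);
        // PROT_READ + MAP_PRIVATE: nothing is copied now; pages fault in on first touch.
        void* p = ::mmap(nullptr, size_, PROT_READ, MAP_PRIVATE, fd, 0);
        ::close(fd);  // the mapping stays valid after the descriptor is closed
        if (p == MAP_FAILED) throw std::runtime_error("mmap failed: " + path);
        data_ = static_cast<const unsigned char*>(p);
    }

    ~MappedFile() { ::munmap(const_cast<unsigned char*>(data_), size_); }

    MappedFile(const MappedFile&) = delete;
    MappedFile& operator=(const MappedFile&) = delete;

    const unsigned char* data() const { return data_; }
    std::size_t size() const { return size_; }

    // Bounds-checked (in debug builds) access to a single byte.
    unsigned char at(std::size_t i) const {
        assert(i < size_);
        return data_[i];
    }

private:
    const unsigned char* data_ = nullptr;
    std::size_t size_ = 0;
};
```

Constructing a `MappedFile` for a 200MB BMP returns almost immediately; the cost of reading from disk is paid page by page as `data()` is actually used.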

My main image of the planet is about 200MB in size. I keep it as a Windows BMP file saved in BGRAUnsignedInt8888Rev form (the optimal format for texturing on Mac OS X) and use memory-mapped file IO to page the BMP into memory.

An image compositor object then composites many of these images; for example, I have one BMP for the entire planet and another for just one state of Australia (NSW). When client code makes a request, the compositor assembles a composited buffer from my layers as required and at the resolution required. This composited buffer is then sent to a texture using GL_STORAGE_SHARED_APPLE as an optimisation.

I also keep some of these textures around for situations where I know in advance that I'm going to zoom in on something; rendering is then very fast, as no dynamic composition is required.
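The heart of the compositing step can be sketched as painting each layer, already scaled to the output resolution, into the destination buffer at a pixel offset while clipping whatever falls outside. `PaintLayer` and `BGRA32` are hypothetical names; the sketch assumes opaque layers painted in order (later layers on top) and does no alpha blending.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One GL_BGRA / GL_UNSIGNED_INT_8_8_8_8_REV pixel: A in bits 24-31, then R, G, B.
typedef std::uint32_t BGRA32;

// Hypothetical compositor step: copies src (srcW x srcH) into dst (dstW x dstH)
// with its top-left corner at (x, y), clipping to the destination bounds.
void PaintLayer(std::vector<BGRA32>& dst, int dstW, int dstH,
                const std::vector<BGRA32>& src, int srcW, int srcH,
                int x, int y) {
    assert(dst.size() == static_cast<std::size_t>(dstW) * dstH);
    assert(src.size() == static_cast<std::size_t>(srcW) * srcH);
    // Intersect the source rectangle with the destination rectangle.
    const int x0 = std::max(x, 0), y0 = std::max(y, 0);
    const int x1 = std::min(x + srcW, dstW), y1 = std::min(y + srcH, dstH);
    if (x0 >= x1 || y0 >= y1) return;  // layer is entirely off-canvas
    for (int row = y0; row < y1; ++row) {
        const BGRA32* from = &src[(row - y) * srcW + (x0 - x)];
        std::copy(from, from + (x1 - x0), &dst[row * dstW + x0]);
    }
}
```

Because pixels are handled as whole 32-bit words, 0xFFFF0000 is opaque red in GL_UNSIGNED_INT_8_8_8_8_REV terms on both big- and little-endian hosts.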

Oh, and since Titan Class Vision targets Mac OS X, I'm using the Accelerate Framework for high-quality, high-performance scaling.
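A sketch of the scaling step, assuming tightly packed rows of 4 bytes per pixel. On Mac OS X it calls the Accelerate Framework's `vImageScale_ARGB8888` with `kvImageHighQualityResampling`; that function treats all four channels alike, so BGRA data can be passed as-is. The nearest-neighbour loop used on other platforms is a swapped-in stand-in so the sketch compiles anywhere, not a match for vImage's quality. `ScaleBGRA` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>
#if defined(__APPLE__)
#include <Accelerate/Accelerate.h>
#endif

// Scales a packed 4-byte-per-pixel image (srcW x srcH) into dst (dstW x dstH).
bool ScaleBGRA(const std::vector<std::uint8_t>& src, std::size_t srcW, std::size_t srcH,
               std::vector<std::uint8_t>& dst, std::size_t dstW, std::size_t dstH) {
    assert(src.size() == srcW * srcH * 4);
    dst.assign(dstW * dstH * 4, 0);
#if defined(__APPLE__)
    // Describe both buffers to vImage; rowBytes assumes no row padding.
    vImage_Buffer in;
    in.data = const_cast<std::uint8_t*>(src.data());
    in.height = srcH;
    in.width = srcW;
    in.rowBytes = srcW * 4;
    vImage_Buffer out;
    out.data = dst.data();
    out.height = dstH;
    out.width = dstW;
    out.rowBytes = dstW * 4;
    // A null temp buffer lets vImage allocate any scratch space it needs.
    return vImageScale_ARGB8888(&in, &out, nullptr, kvImageHighQualityResampling) == kvImageNoError;
#else
    // Portable stand-in: nearest-neighbour sampling, one pixel at a time.
    for (std::size_t y = 0; y < dstH; ++y) {
        const std::size_t sy = y * srcH / dstH;
        for (std::size_t x = 0; x < dstW; ++x) {
            const std::size_t sx = x * srcW / dstW;
            for (int c = 0; c < 4; ++c)
                dst[(y * dstW + x) * 4 + c] = src[(sy * srcW + sx) * 4 + c];
        }
    }
    return true;
#endif
}
```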

It all works well and is fast, even on a tired old G4 PowerBook, and because the Accelerate Framework spreads its work across processors, multiprocessor machines are put to good use. One naturally has to be mindful of the compositor's virtual memory usage, but that is a resource management exercise.
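One way to approach that resource management exercise, for example for the textures kept around ahead of a zoom, is a cache with a byte budget that evicts the least recently used buffer first. This is a minimal sketch assuming buffers are keyed by a region description string; `CompositeCache` is hypothetical and not the author's code.

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical byte-budgeted LRU cache of composited buffers.
class CompositeCache {
public:
    explicit CompositeCache(std::size_t budgetBytes) : budget_(budgetBytes) {
        assert(budgetBytes > 0);
    }

    // Returns the cached buffer or nullptr; a hit marks the entry most recently used.
    const std::vector<unsigned char>* Find(const std::string& key) {
        auto it = index_.find(key);
        if (it == index_.end()) return nullptr;
        lru_.splice(lru_.begin(), lru_, it->second);  // move entry to the front
        return &it->second->second;
    }

    void Insert(const std::string& key, std::vector<unsigned char> buffer) {
        auto it = index_.find(key);
        if (it != index_.end()) {  // replace an existing entry
            used_ -= it->second->second.size();
            lru_.erase(it->second);
            index_.erase(it);
        }
        used_ += buffer.size();
        lru_.emplace_front(key, std::move(buffer));
        index_[key] = lru_.begin();
        // Evict from the back (least recent) but never the entry just added.
        while (used_ > budget_ && lru_.size() > 1) {
            Entry& victim = lru_.back();
            used_ -= victim.second.size();
            index_.erase(victim.first);
            lru_.pop_back();
        }
    }

    std::size_t UsedBytes() const { return used_; }

private:
    typedef std::pair<std::string, std::vector<unsigned char>> Entry;
    std::size_t budget_;
    std::size_t used_ = 0;
    std::list<Entry> lru_;  // front = most recently used
    std::map<std::string, std::list<Entry>::iterator> index_;
};
```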

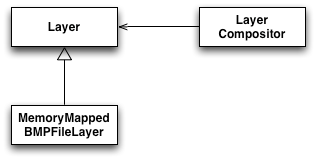

Here’s the class structure I came up with. Feel free to use it in your own work, but please be kind and include a reference to this page along with some credit.

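```cpp
#include <list>
#include <string>
#include <boost/shared_ptr.hpp>

namespace WorldLayerCompositor {

// One pixel in BGRA / GL_UNSIGNED_INT_8_8_8_8_REV form. unsigned long is
// 32 bits on 32-bit PowerPC Mac OS X, but not on 64-bit (LP64) builds.
typedef unsigned long BGRA;

// Convert little-endian values read from the BMP file to host byte order.
inline unsigned short SwapInt16LittleToHost(unsigned short arg) throw();
inline unsigned long SwapInt32LittleToHost(unsigned long arg) throw();

// An image covering part of the planet at a given resolution.
class Layer {
public:
    virtual ~Layer();

    inline void GetCentreLatLong(double& outLat, double& outLong) const throw();
    inline double GetResolution() const throw();
    inline void GetSizePx(unsigned& outWidthPx, unsigned& outHeightPx) const throw();

    // Pixel data for the whole layer.
    virtual BGRA* GetBuffer() const throw() = 0;
};

// A layer whose pixels are paged in from a BMP file via memory mapping.
class MemoryMappedBMPFileLayer : public Layer {
public:
    explicit MemoryMappedBMPFileLayer(const std::string& inFile) throw();
    virtual ~MemoryMappedBMPFileLayer();

    virtual BGRA* GetBuffer() const throw();
};

// Composites a list of layers into buffers for a requested sub-region.
class LayerCompositor {
public:
    LayerCompositor() throw();

    typedef std::list<boost::shared_ptr<Layer> > LayerList;

    LayerList::iterator AddLayer(boost::shared_ptr<Layer> inLayerP) throw();
    void RemoveLayer(const LayerList::iterator& inLayerIter) throw();

    // Retrieves the next composited sub-buffer and where it sits
    // within the region set by SetSubRegion.
    bool GetNextSubBuffer(
        unsigned long** inBGRAUnsignedInt8888RevPP,
        unsigned& outSubXPx,
        unsigned& outSubYPx,
        unsigned& outSubWidthPx,
        unsigned& outSubHeightPx
    ) const throw();

    void GetEffectiveSizePx(unsigned& outWidthPx, unsigned& outHeightPx) const throw();

    // Selects the region (centre, resolution and size in pixels) to composite.
    inline void SetSubRegion(
        double inLat, double inLong,
        double inResolution,
        unsigned inWidthPx, unsigned inHeightPx
    ) throw();
};

} // namespace WorldLayerCompositor
```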
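`MemoryMappedBMPFileLayer::GetBuffer` presumably has to skip the BMP headers to reach the pixels. A minimal sketch of that lookup, assuming an uncompressed 32-bit BMP with a BITMAPINFOHEADER (at least 54 bytes of headers). It assembles little-endian fields byte by byte instead of calling `SwapInt32LittleToHost`, which gives the same result on the big-endian G4 and on little-endian hosts. `BMPInfo`, `ReadLE16`, `ReadLE32` and `ParseBMPHeader` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Little-endian reads that are independent of host byte order.
inline std::uint16_t ReadLE16(const unsigned char* p) {
    return static_cast<std::uint16_t>(p[0] | (p[1] << 8));
}
inline std::uint32_t ReadLE32(const unsigned char* p) {
    return static_cast<std::uint32_t>(p[0]) |
           (static_cast<std::uint32_t>(p[1]) << 8) |
           (static_cast<std::uint32_t>(p[2]) << 16) |
           (static_cast<std::uint32_t>(p[3]) << 24);
}

struct BMPInfo {                 // hypothetical summary of the fields needed
    std::uint32_t pixelOffset;   // bfOffBits: where the pixel data starts
    std::int32_t width;
    std::int32_t height;         // > 0 means rows are stored bottom-up
    std::uint16_t bitsPerPixel;
};

// Returns false unless the bytes look like a 32-bit BMP whose pixels fit in size.
inline bool ParseBMPHeader(const unsigned char* bytes, std::size_t size, BMPInfo& out) {
    assert(bytes != nullptr);
    if (size < 54 || bytes[0] != 'B' || bytes[1] != 'M') return false;
    out.pixelOffset  = ReadLE32(bytes + 10);
    out.width        = static_cast<std::int32_t>(ReadLE32(bytes + 18));
    out.height       = static_cast<std::int32_t>(ReadLE32(bytes + 22));
    out.bitsPerPixel = ReadLE16(bytes + 28);
    const std::uint32_t compression = ReadLE32(bytes + 30);
    if (compression != 0 && compression != 3) return false;  // BI_RGB or BI_BITFIELDS
    if (out.bitsPerPixel != 32 || out.width <= 0 || out.height == 0) return false;
    if (out.pixelOffset > size) return false;
    // 32 bpp rows are already 4-byte aligned, so there is no row padding.
    const std::uint64_t rowBytes = static_cast<std::uint64_t>(out.width) * 4u;
    const std::uint64_t rows = out.height < 0 ? -static_cast<std::int64_t>(out.height)
                                              : out.height;
    return rows <= (size - out.pixelOffset) / rowBytes;
}
```

With a positive height the first row in the mapping is the bottom row of the image, which matters when mapping pixel rows back to latitudes.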

I intend to evolve this class much further and make it aware of planet-related concerns. For example, if a request is currently made for a region that extends over the dateline, I shift the region back east or west. In the future I’ll handle this properly, so that the compositing itself takes the dateline into account.
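The current behaviour, shifting a request back east or west rather than letting it cross the dateline, can be sketched as follows. It assumes the region's longitude span is known in degrees and that the requested centre already lies within [-180, 180]; `ShiftRegionOffDateline` is a hypothetical name.

```cpp
#include <cassert>

// Hypothetical helper: returns an adjusted centre longitude so that a region
// spanning spanDegrees stays within [-180, 180] instead of crossing the dateline.
inline double ShiftRegionOffDateline(double centreLong, double spanDegrees) {
    assert(spanDegrees > 0.0 && spanDegrees <= 360.0);
    const double half = spanDegrees / 2.0;
    if (centreLong - half < -180.0) return -180.0 + half;  // move the region east
    if (centreLong + half > 180.0) return 180.0 - half;    // move the region west
    return centreLong;                                      // already clear of the dateline
}
```

Handling the dateline properly would instead split such a request into two sub-regions, one either side of 180°, and composite both.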

Another idea is to add layers described in vector terms using SVG and GML; I think there are some interesting possibilities there.